Building large scale JavaScript applications in a monorepo in an optimised way — Part 1

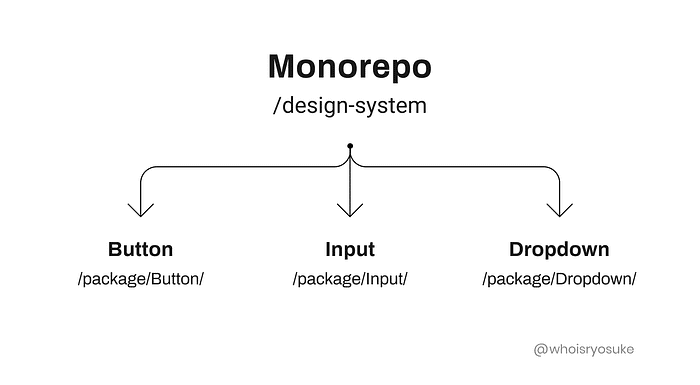

A monorepo (“mono” meaning ‘single’ and “repo” being short for ‘repository’) is a software development strategy where code for many projects is stored in the same repository. These projects may be related or logically independent and run by different teams. Common resources shared by all the projects can be defined as well.

Consider a multi-storey building where people on each floor perform different tasks independently of each other, but everyone shares a Xerox machine on the ground floor. This is a useful analogy for a monorepo.

I recently started working on design-system components, which live in a monorepo: a one-stop shop for all the UI components used across different products. It has a separate package for every component, and each component is used as a dependency across multiple projects.

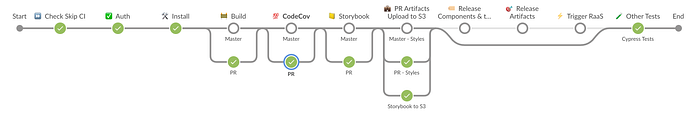

A monorepo has many advantages, and I would like to emphasise two: a unified CI/CD process and a unified build process. You can use the same CI/CD process for every project in the repo and a shared build process for every application in the repository.

As the number of projects in your monorepo increases, the time taken by the build process increases drastically, which hurts developer productivity.

Shown above is a sample user-defined Jenkins continuous deployment pipeline with multiple tasks, also known as stages, configured as per the requirements of my project. The stages are as follows:

-> Auth: At this stage, we identify the user whose commit triggered the build, fetch the latest git tags, branch name, commit id, etc., which we intend to use in later stages, and configure the git credential helper to point at the file holding the credentials of the user authorised to post the build status to GitHub. This stage takes less than 10 seconds to complete.

Below is sample code for reference:

    stage('✅ Auth') {
        steps {
            sh '''
                # Overwrite rather than append, so the file does not grow with every build
                echo "https://${GITHUB_USER}:${GITHUB_TOKEN}@github.com" > /tmp/gitcredfile
                git config --global user.name "${GITHUB_USER}"
                git config --global user.email "firstname_lastname@xyz.com"
                git config --global credential.helper "store --file=/tmp/gitcredfile"
            '''
            sh 'git remote -v'
            sh 'git fetch --tags'
            sh 'git branch -a'
        }
    }

-> Install: At this stage, you install all the dependencies listed in package.json. You may use yarn, npm or any other package manager that is convenient for you. I prefer yarn because, in my experience, it installs packages noticeably faster than npm.

    stage('🛠 Install') {
        steps {
            sh 'yarn install --frozen-lockfile --verbose'
        }
    }

The time taken for this stage to complete depends on the number of dependencies you need to install. Every time a build is triggered, all the dependencies are installed again. To optimise this stage, you may cache the dependencies.

    stage('🛠 Install from cache') {
        steps {
            sh 'yarn install --cache-folder "${WORKSPACE}/yarn_cache"'
        }
    }

When I tried this, I ran into race conditions and got a lot of builds with corrupted modules as a result. Caches are prone to race conditions when multiple jobs share them. This is especially true in a monorepo where multiple developers contribute to multiple projects at the same time: one build could write to the cache while another build reads from it, or two builds could write to the cache simultaneously. Cache dependencies only when your project's builds are triggered sequentially.
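One way to keep a shared cache without these races is to let only one build touch it at a time. The following is a minimal sketch under my own assumptions, not what we run in our pipeline: `with_lock`, `LOCK_TIMEOUT` and the lock path are hypothetical names, and the approach relies on `mkdir` being atomic. Yarn 1 also has a `--mutex` flag meant for concurrent runs, which is worth checking before rolling your own lock.

```shell
#!/bin/bash
# Run a command while holding an exclusive lock (hypothetical helper).
# mkdir either creates the directory or fails, so only one caller can win.
with_lock() {
  local lock_dir="$1"
  shift
  local waited=0
  until mkdir "$lock_dir" 2>/dev/null; do
    # Give up after LOCK_TIMEOUT seconds (default: 600) instead of waiting forever
    if [ "$waited" -ge "${LOCK_TIMEOUT:-600}" ]; then
      echo "Timed out waiting for $lock_dir" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  local status=0
  "$@" || status=$?
  # Release the lock whether or not the command succeeded
  rmdir "$lock_dir"
  return "$status"
}

# Possible use inside the Install stage (paths are assumptions):
# with_lock /tmp/yarn_cache.lock yarn install --frozen-lockfile --cache-folder "${WORKSPACE}/yarn_cache"
```

The trade-off is that installs are serialised, and a build that is killed while holding the lock leaves the lock directory behind, so it needs a cleanup step.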

-> Build: The monorepo I’m working on has 145 projects and receives contributions from developers spread across multiple global cross-functional teams. This requires developers to rebase their code often, which triggers a new build job every time. The build stage alone took ~12–15 minutes to build all the projects. We use lerna, a tool for managing multi-package repositories.

    # Command to run the build
    # References: https://github.com/lerna/lerna/tree/main/commands/run
    #             https://www.npmjs.com/package/@lerna/filter-options
    lerna run build --stream

To optimise this stage, we need an incremental build, i.e., one that builds only the projects modified since the previous release.

    lerna run build --stream --since $(git describe --tags --abbrev=0)

We also have multiple internal dependencies between the projects, so when one project is modified, we need to build all the dependents and dependencies that the change would affect. For example, if a button component is modified, we need to build any higher-order components that use the button component internally.

The --toposort option sorts packages in topological order, i.e., dependencies come before dependents, instead of lexically by directory.
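To make "dependencies before dependents" concrete, here is a small sketch that uses the standard `tsort` utility rather than lerna itself. The package names and the `build_order` wrapper are made up for illustration; each input line is a "dependency dependent" pair.

```shell
#!/bin/bash
# Print a build order for "dependency dependent" pairs read from stdin.
# tsort prints every name so that the left item of each pair comes
# before the right one.
build_order() {
  tsort
}

# Hypothetical design-system packages: tokens <- button <- dropdown <- form
printf '%s\n' "tokens button" "button dropdown" "dropdown form" | build_order
```

Because this example is a single chain, the only valid order is tokens, button, dropdown, form. With a wider dependency graph several orders can be valid, and a tool is free to pick any of them.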

    lerna run build --scope <my-component> --include-dependencies --include-dependents

The above command overrides the --since option, so I added a simple script that builds just the modified components along with their dependencies and dependents.

    stage('🚧 Build') {
        // Nested stages must be wrapped in a stages block
        stages {
            stage('PR') {
                steps {
                    sh '''#!/bin/bash
                        echo "Starting yarn build"
                        # Collect the packages changed since the last tag, plus their dependencies
                        for dependency in $(lerna ls --toposort --include-dependencies --since $(git describe --tags --abbrev=0)); do
                            dependencies+=("${dependency}")
                        done
                        scope=${dependencies[*]}
                        # $scope is left unquoted on purpose so each package name is a separate argument
                        lerna run build --scope $scope || exit 1
                    '''
                }
            }
        }
    }

The optimised build takes roughly 0 to 4 minutes. It finishes in no time when no packages are modified (say, when you are only updating changelogs, adding docs or adding test suites), and in the worst case takes about 4 minutes to build the most complex project in my repository. That is a reduction of about 70% in build time!
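The PR script above hands the whole package list to a single `--scope`. A slightly more defensive variant, sketched below, passes one `--scope` flag per package and skips the build when the list is empty, so it does not depend on how lerna treats an empty scope. `scope_flags` is a hypothetical helper of mine, not part of lerna.

```shell
#!/bin/bash
# Turn a list of package names into repeated --scope flags (hypothetical helper).
scope_flags() {
  local flags=()
  local pkg
  for pkg in "$@"; do
    flags+=(--scope "$pkg")
  done
  printf '%s\n' "${flags[*]}"
}

# Possible use in the PR stage:
# changed=$(lerna ls --toposort --include-dependencies --since "$(git describe --tags --abbrev=0)")
# if [ -z "$changed" ]; then
#   echo "No packages changed, skipping build"
# else
#   lerna run build $(scope_flags $changed) || exit 1
# fi
```

For example, `scope_flags button dropdown` prints `--scope button --scope dropdown`.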

-> Build parallelisation: It is important to note that a pull request only needs the modified packages to be built, whereas the master/release branch has to build all the projects as a sanity check.
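That decision can be sketched as a small shell helper. The branch patterns and the `build_mode` name are assumptions for illustration; `BRANCH_NAME` is the variable Jenkins sets in multibranch pipelines, so adjust it to whatever your job exposes.

```shell
#!/bin/bash
# Decide whether a branch needs a full or an incremental build (hypothetical helper).
build_mode() {
  case "$1" in
    master|release|release/*) echo full ;;
    *) echo incremental ;;
  esac
}

# Possible use in the Build stage:
# if [ "$(build_mode "${BRANCH_NAME}")" = "full" ]; then
#   lerna run build --stream
# else
#   lerna run build --stream --since "$(git describe --tags --abbrev=0)"
# fi
```

Keeping the rule in one function means the PR and release paths cannot drift apart silently.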

Jenkins offers a way to parallelise the stages. You may use the below script for reference.

    stage('Something') {
        parallel {
            stage('Something - Part 1') {
                steps {
                    echo 'Do something'
                }
            }
            stage('Something - Part 2') {
                steps {
                    echo 'Do something'
                }
            }
        }
    }

Do check out Part 2 of this post, where I will share ways to optimise the testing stage of the development lifecycle.

A shout-out to my mentor, Ravi Teja Channapati, Principal Engineer, Intuit.